All Roads Lead to Rome: The Machine Learning Job Market in 2022

In the future, every successful tech company will use their data moats to build some variant of an Artificial General Intelligence. Plus some updates on my career.

I was on the job market recently, open to suggestions on what I should do next. I’m deeply thankful to all those who reached out and pitched their companies, and also to people who shared their wisdom on how they navigated their own careers.

I’m pleased to say that I’ve joined Halodi Robotics as their VP of AI, and will be hiring for the Bay Area office. We’ll be doing some real robot demos this week near Palo Alto, so please get in touch with me if you’re interested in learning about how we plan to create customer value with deep learning on humanoid robots (1 year), and then solve manipulation (5 years), and then solve AGI (20 years).

I suspect that there are many other deep learning researchers of the “2015-2018 vintage” who are contemplating similar career moves, so I’ll share what I learned in the last month and how I made my career decision in the hopes that this is useful to them. I think that in the next decade we’ll see a lot of software companies increasingly adopt an “AGI strategy” as a means to make their software more adaptive and generally useful.

Options Considered

My only constraint was that I wanted to continue applying my ML skills at my next job. Here is the table of options I considered. I had chats with directors and founders from each of these companies, but I did not initiate the formal HR interview process with most of them. This is not intended as a flex; these are just the options I considered and my perceived pros and cons. I’m not like one of those kids who gets into all the Ivy League schools at once and gets to pick whatever they want.

These are subjective opinions: a mere snapshot of what I believe are the strengths and weaknesses of each option in April 2022. In the hype-driven Silicon Valley, the perceived status of a company can go from rags to riches back to rags within a few years, so this table will most certainly age poorly.

| Option | Pros | Cons |
| --- | --- | --- |
| FAANG + similar | Low 7 figures compensation (staff level), technological lead on compute (~10 yrs), unit economics of research is not an issue | Things move slower, less autonomy, OKRs etc. |
| Start my own company | Maximally large action space, blue check mark on Twitter | I’m more interested in solving AGI than solving customer problems |
| OpenAI | Technological lead on LLMs (~1 yr) + an interesting new project they are spinning up | Culture and leadership team seem to be already established |
| Large Language Model Startup | Strong teams, transform computing in <10 years, iterate quickly on LLM products | Competing with FAANG + OpenAI on startup budget; unclear whether LLMs will be defensible technology on their own |
| Tesla | Tesla Bot, technological lead on data engine (~2 yrs), technological lead on manufacturing (~10 yrs) | No waiting in line for coffee |
| Robotics Startups (including Halodi) | Huge moat if successful, opportunity to lead teams. Halodi has technological edge on hardware (~5 yrs) | Robotics research is slow, Robotics startups tend to die |
| ML + Healthcare Startups | Lots of low-hanging fruit for applying research; meaningfully change the world | Product impact is even slower than robotics due to regulatory capture by hospitals and insurance companies. 10 years before the simplest of ML techniques can be rolled out to people. |
| Startups working on other Applications of ML | Lots of low-hanging fruit + opportunity to lead teams | I’m more interested in solving AGI than solving customer problems |
| Crypto + DeFi | Tokenomics is interesting. 60% annual returns at Sharpe 3+ is also interesting. | Not really an AGI problem. Crypto community has weird vibes |

Technological Lead Time

The most important deciding factor for me was whether the company has some kind of technological edge years ahead of its competitors. A friend on Google’s logging team tells me he’s not interested in smaller companies because they are so technologically far behind Google’s planetary-scale infra that they haven’t even begun to fathom the problems that Google is solving now, much less finish solving the problems that Google already worked on a decade ago.

In the table above I’ve listed companies that I think have unique technology edges. For instance, OpenAI is absolutely crushing it at recruiting right now because they are ahead in Large Language Model algorithms, probably in the form of trade secrets on model surgery and tuning hyperparameters to make scaling laws work. OpenAI has clearly done well with building their technical lead time, despite FAANG’s compute superiority.

Meanwhile, the average machine learning researcher at FAANG has a 15-year lead time in raw compute compared to a PhD student, and Google and DeepMind have language models that are probably stronger than GPT-3 on most metrics. There are cases where technological lead on compute is not enough; some researchers left Google because they were unhappy with all the red tape they had to go through to try to launch LLM-based products externally.

I seriously considered pivoting my career to work on generative models (i.e. LLMs, Multimodal Foundation Models), because (1) robotics is hard and (2) the most impressive case studies in ML generalization always seem to be in generative modeling. Again, think back to technological lead times: why would any machine learning researcher want to work on something that isn’t at the forefront of generalization capability? However, the pure-generative modeling space feels a bit competitive, with everyone fighting to own the same product and research ideas. The field would probably evolve in the same way with or without me.

Having futuristic technology is important for recruiting engineers because many of them don’t want to waste years of their life building a capability that someone else already has. To use an analogy from another field of science, it would be like a neuroscience lab trying to recruit PhD students to study monkey brains with patch-clamp experiments when the lab next door is using optogenetic techniques and Neuralink robots. You could reinvent these yourself if you’re talented, but is it worth spending precious years of your life on that?

Of course, companies are not the same thing as research labs. What matters more in the long run is the product-market fit and the team’s ability to build future technological edge. Incumbents can get bloated and veer off course, while upstarts can exploit a different edge or take the design in a unique direction. Lots of unicorn companies were not first-movers.

Why not start your own company?

Being a Bay Area native, I thought my next job would be to start my own company around MLOps. I wanted to build a really futuristic data management and labeling system that could be used for AGI + Active Learning. Three things changed my mind:

First, I talked to a bunch of customers to understand their ML and data management needs to see if there was product-market fit with what I was building. Many of their actual problems weren’t at the cutting edge of technology, and I simply couldn’t get excited about problems like building simulators for marketing campaigns or making better pose estimators for pick-and-place in factories or ranking content in user feeds. The vast majority of businesses solve boring-but-important problems. I want my life’s work to be about creating much bigger technological leaps for humanity.

Secondly, I think it’s rare for CEOs to contribute anything technically impressive after their company crosses a $100M valuation. If they do their job well, they invariably spend the rest of their lives dealing with coordination, product, and company-level problems. They accumulate incredible social access and leverage and might even submit some code from time to time, but their daily schedule is full of so much bullshit that they will never productively tinker again. This happens to senior researchers too. This is profoundly scary to me. From Richard Hamming’s You and Your Research: “In the first place if you do some good work you will find yourself on all kinds of committees and unable to do any more work.”

Legend has it that Ken Thompson wrote the UNIX operating system when his wife went on a month-long vacation, giving him time to focus on deep work. The Murder of Wilbur writes, “How terrifying would it be if that was true? Is it possible that Thompson was burdened by responsibilities his entire life, and then in a brief moment of freedom did some of the most important work anyone has ever done?”

Thirdly, Halodi has built pretty awesome technology and they’ve given me a rare opportunity to live in the future, building on top of something that is 5+ years ahead of its time. I’m very impressed by the respect that Bernt (Halodi’s CEO) has for human anatomy: from the intrinsic passive intelligence of overdamped systems that lets us grasp without precise planning, to the spring systems in our feet that let us walk across variable terrain while barely expending energy. We both share the belief that rather than humanoid robots being “overkill” for tackling most tasks, the humanoid is the only form that can work when you want to design the world around humans rather than machines.

All Roads Lead to Rome

A few months ago I asked Ilya Sutskever whether it made more sense to start a pure-play AGI research lab (like OpenAI, DeepMind) or to build a profitable technology business that, as a side effect, would generate the data moat needed to build an AGI. In his provocative-yet-prescient fashion, Ilya said to me: “All Roads Lead to Rome - Every Successful (Tech) Company will be an AGI company”.

This sounds a bit unhinged at first, until you remember that repeatedly improving a product by the same delta involves exponentially harder technology.

- In semiconductor manufacturing, shrinking from 32nm to 14nm process nodes is pretty hard, but going from 14nm to 7nm process nodes is insanely hard, requiring you to solve intermediate problems like creating ultrapure water.

- Creating a simple Text-to-Speech system for ALS patients was already possible in the 1980s, but improving pronunciation for edge cases and handling inflection naturally took tremendous breakthroughs in deep learning.

- A decent character-level language model can be trained on a single computer, but shaving a few bits of entropy off conditional character modeling requires metaphorically lighting datacenters on fire.

- Autonomous highway driving is not too hard, but autonomously driving through all residential roads at L5 is considered by many to be AGI-complete.

In order to continue adding marginal value to the customer in the coming decades, companies are going to have to get used to solving some really hard problems. Perhaps eventually everyone converges to solving the same hard problem, Artificial General Intelligence (AGI), just so they can make a competitive short video app or To-Do list or grammar checker. We can quibble about what “AGI” means and what time frame it would take for all companies to converge to this, but I suspect that Foundation Models will soon be table stakes for many software products. Russell Kaplan has shared some similar ideas on this as well.

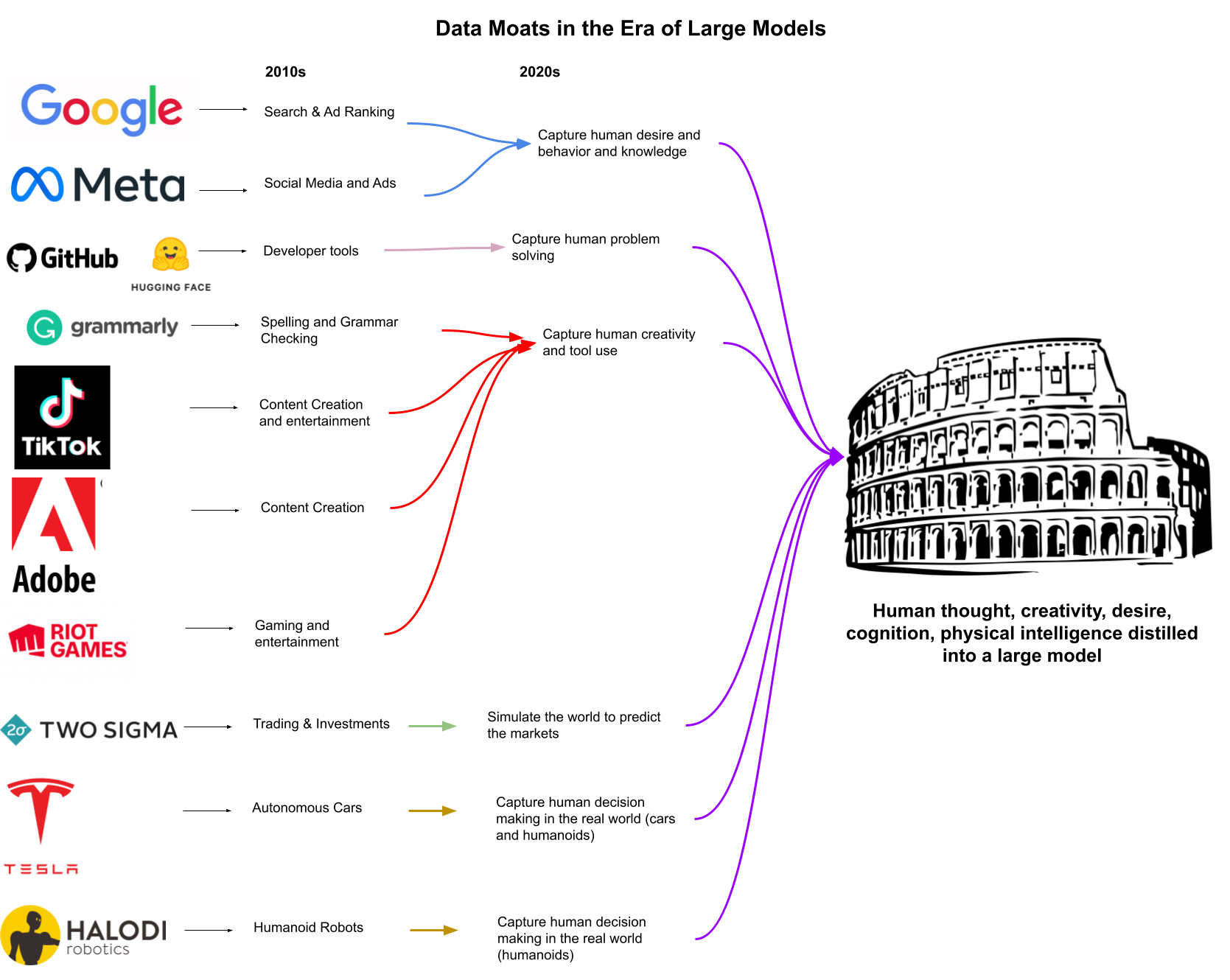

I also wonder if in a few years, expertise on losslessly compressing large amounts of Internet-scale data will cease to be a defensible moat between technologically advanced players (FAANG). It therefore makes sense to look for auxiliary data and business moats to stack onto large-scale ML expertise. There are many roads one can take here to AGI, which I have sketched out below for some large players:

For instance, Alphabet has so much valuable search engine data capturing human thought and curiosity. Meta records a lot of social intelligence data and personality traits. If they so desired, they could harvest Oculus controller interactions to create trajectories of human behavior, then parlay that knowledge into robotics later on. TikTok has recommendation algorithms that probably understand our subconscious selves better than we understand ourselves. Even random-ass companies like Grammarly and Slack and Riot Games have unique data moats for human intelligence. Each of these companies could use their business data as a wedge into creating general intelligence, by behavior-cloning human thought and desire itself.

The moat I am personally betting on (by joining Halodi) is a “humanoid robot that is 5 years ahead of what anyone else has”. If your endgame is to build a Foundation Model that trains on embodied real-world data, having a real robot that can visit every state and every affordance a human can visit is a tremendous advantage. Halodi has it already, and Tesla is working on theirs. My main priority at Halodi will initially be to train models to solve specific customer problems in mobile manipulation, but also to set the roadmap for AGI: how compressing large amounts of embodied, first-person data from a human-shaped form can give rise to things like general intelligence, theory of mind, and sense of self.

Embodied AI and robotics research has lost some of its luster in recent years, given that large language models can now explain jokes while robots are still doing pick-and-place with unacceptable success rates. But it might be worth taking a contrarian bet that training on the world of bits is not enough, and that Moravec’s Paradox is not a paradox at all, but rather a consequence of us not having solved the “bulk of intelligence”.

Reality has a surprising amount of detail, and I believe that embodied humanoids can be used to index all that untapped detail into data. Just as web crawlers index the world of bits, humanoid robots will index the world of atoms. If embodiment does end up being a bottleneck for Foundation Models to realize their potential, then humanoid robot companies will stand to win everything.

Want Intros to ML Startups?

In the course of talking to many companies and advisors over the last month, I learned that there are so, so many interesting startups tackling hard ML problems. Most of them are applied research labs trying to solve interesting problems, and a few of them have charted their own road to Rome (AGI).

Early in your career it makes a lot of sense to surround yourself with really great mentors and researchers, such as those at an industry research lab. Later on, you might want to bring your experience to a startup to build the next generation of products. If this describes you, I’d be happy to connect you to these opportunities - just shoot me an email with (1) where you are on the pure research vs. applied research spectrum (2) what type of problems you want to work on (Healthcare, Robotics, etc.) (3) the hardest you ever worked on a project, and why you cared about it (4) your resume. If you have the skill set I’m looking for, I may also recruit you to Halodi 😉.

Honest Concerns

I have some genuine concerns with Halodi (and AGI startups in general). History tells us the mortality rate of robotics companies is very high, and I’m not aware of any general-purpose robot company that has ever succeeded. There is a tendency for robotics companies to start off with the mission of general-purpose robots and then rapidly specialize into something boring as the bean counters get impatient. Boston Dynamics, Kindred, Telexistence - the list goes on and on. In business as in life, the forces of capitalism and evolution conspire to favor specialization of hardware over generalization of intelligence. I pray that does not happen to us.

I’m reminded of Gwern’s essay on timing: “Launching too early means failure, but being conservative & launching later is just as bad because regardless of forecasting, a good idea will draw overly-optimistic researchers or entrepreneurs to it like moths to a flame: all get immolated but the one with the dumb luck to kiss the flame at the perfect instant, who then wins everything, at which point everyone can see that the optimal time is past.”

But I also remind myself of what Richard Hamming said about Claude Shannon:

“He wants to create a method of coding, but he doesn’t know what to do so he makes a random code. Then he is stuck. And then he asks the impossible question, ‘What would the average random code do?’ He then proves that the average code is arbitrarily good, and that therefore there must be at least one good code. Who but a man of infinite courage could have dared to think those thoughts?”

Life is too short to attempt anything less than that which takes infinite courage. LFG.